Nearly all of the new camera features of Google’s Pixel 10 Pro lean on artificial intelligence. When you use Pro Res Zoom to zoom in at 100x, for example, the Pixel Camera uses generative AI to recreate a sharp, clear version. Or when you’re taking photos of people, the Auto Best Take feature melds multiple shots to create an image where everyone looks good.

But Google added another low-level feature to the Pixel 10 line that isn’t getting much attention: C2PA content credentials. C2PA, or the Coalition for Content Provenance and Authenticity, is an effort to identify whether an image has been created or edited using AI and to help weed out fake images. AI misinformation is a growing problem, especially as the systems used to create it have been rapidly improving, with Google among those advancing the technology.

Apple, however, is not part of the coalition of companies pledging to work with C2PA content credentials. But it sells millions of iPhones, some of the most popular image-making devices in the world. It’s time the company implemented the technology in its upcoming iPhone 17 cameras.

Telling genuine photos from AI-edited ones

C2PA is an initiative co-founded by Adobe and other companies to tag media with content credentials that identify whether they’re AI-generated or AI-edited. Google is a member of the coalition. Starting with the Pixel 10 line, every image captured by the camera is embedded with C2PA information, and if you use AI tools to edit a photo in the Google Photos app, it will also get flagged as being AI-edited.

When viewing an image in Google Photos on a phone, swipe up to display information about it. Below details such as the camera settings used to capture the image is a new “How this was made” section. It’s not very detailed: a typical shot says it’s “Media captured with a camera,” but if an AI tool such as Pro Res Zoom was used, you’ll see “Edited with AI tools.” (I was able to view this on a Pixel 10 Pro XL and a Samsung Galaxy S25 Ultra, but it didn’t show up in the Google Photos app on an iPhone 16 Pro.)

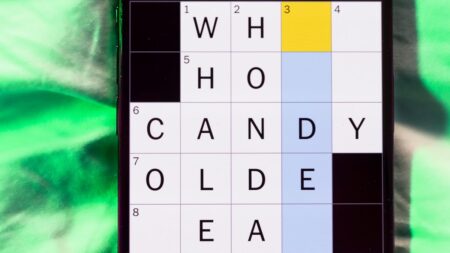

A photo captured by the Pixel 10 Pro XL includes C2PA information indicating that AI tools were used, in this case Pro Res Zoom, which uses generative AI to rebuild an image zoomed at 100x.

As another example, if you use the Help me edit field to replace the background of a photo after taking it, the generated version also includes “Edited with AI tools” in its information.

Using Google’s descriptive editing tool in Google Photos adds the “Edited with AI tools” indicator because the background has been replaced with an AI-generated one.

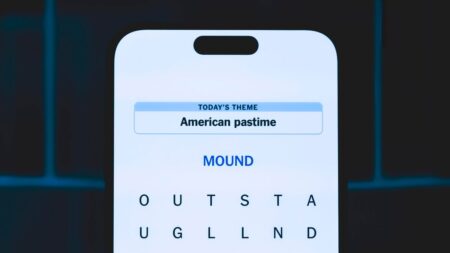

To be fair, AI has a role in pretty much every photo you take with a smartphone, given that machine learning is used to identify objects and scenes to better merge bursts of exposures that are captured when you tap the shutter button. The Pixel 10 flags those as “Edited with non-AI tools,” so Google is specifically applying the AI tag to images where generative AI is at work. So far, the implementation is inconsistent: A short AI-generated clip I made using the Photo to Video feature in Google Photos on the Pixel 10 Pro XL shows no C2PA data at all, though it does include a “Veo” watermark in the corner of the video.

These video frames were AI-generated from a still photo (left), but because the result is a video, Google Photos isn’t showing a C2PA tag.

What’s important is that the C2PA info is there

But here’s the key point: What Google is doing is not just tagging pictures that have been touched by AI. The Camera app is adding the C2PA data to every photo it captures, even the ones you snap and do nothing with.

The goal is not to highlight AI-edited photos. It’s to let you look at any photo and see where it came from.

When I talked to Isaac Reynolds, group product manager for the Pixel cameras, before the Pixel 10 launch, C2PA was a prominent topic even though in practical terms the feature isn’t remotely as visible as Pro Res Zoom or the new Camera Coach.

“The reason we are so committed to saving this metadata in every Pixel camera picture is so people can start to be suspicious of pictures without any information,” said Reynolds. “We’re just trying to flood the market with this label so people start to expect the data to be there.”

This is why I think Apple needs to adopt C2PA and tag every photo made with an iPhone. It would represent a massive influx of tagged images and give weight to the idea that an image with no tag should be regarded as potentially not genuine. If an image looks off, particularly when it involves current events or is meant to imitate a business in order to scam you, looking at its information can help you make a better-informed choice.

Google isn’t an outlier here. Samsung Galaxy phones add an AI watermark and a content credential tag to images that incorporate AI-generated material. Unfortunately, since Apple is not even listed as one of the C2PA members, I admit it seems like a stretch to expect that the company would adopt the technology. But given Apple’s size and influence in the market, adding C2PA credentials to every image the iPhone makes would make a difference and hopefully encourage even more companies to get on board.